For several weeks now, eKool has been a talking point in the media, both on purely technical grounds (it doesn't work, it's slow, it's confusing) and on a more fundamental level: is it really necessary to force everything into a framework and write it all down?

I have no personal experience with eKool, so my opinion here is based purely on what I have read online and what acquaintances have described.

The problems seem to stem from the fact that eKool, which is effectively a monopoly in its market, is managed and developed by Koolitööde AS, a private company.

In a market economy, the primary goal of any company is generally to earn the largest possible profit for its owners. In a market with working competition, a company has to take customers' expectations and wishes into account to reach that goal, because otherwise the customer simply goes to a competitor. eKool holds a monopoly position in the Estonian market, and it is extremely unlikely that anyone would start competing over such a small market. So the company probably has little reason to work hard for its customers' favour.

For schools, the choice at the moment therefore comes down to three options: use eKool and hope that pressure applied through the press still gets something improved from their point of view, not use it at all, or build their own system (which some schools are in fact doing).

It would seem considerably more logical than the current model if all the schools that have already committed to developing their own system stopped struggling on their own and instead joined forces by forming an MTÜ (non-profit association) or SA (foundation), which would then start building a new eKool.

– Since the developer would then belong to the schools themselves, they would have a motive to build a tool that is as convenient, fast and labour-saving as possible.

– There would be no need to pay for the owners' profit, so the solution should come out cheaper than the current one.

– It should also come out considerably cheaper than each school building its own system.

Ideally, the design of such a system would be as distributed and modular as possible.

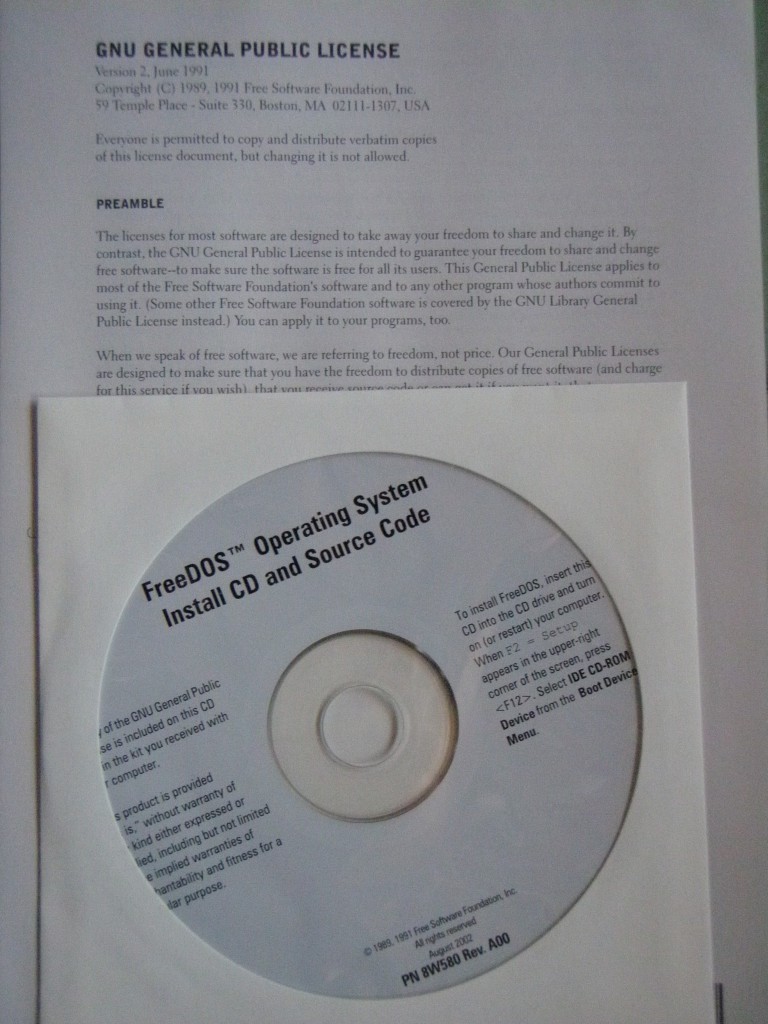

The central server would handle only user authentication, while each school could, if it wished, install the actual application somewhere else (this would create competition between hosting providers, which would push the price down). Each school could thus decide, within certain limits, which version, which modules and which look (skin) it uses. The MTÜ would collect feedback from users, develop the most important modules and run the central authentication system. The application could be open source, so that everyone involved could easily make fixes and, if they wanted, create additional modules that matter specifically to them.
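To make the split a bit more concrete, here is a minimal sketch of how it might look in Python. Everything in it is my own assumption for illustration: the names `CentralAuth` and `SchoolInstance`, the token format, and the use of a shared HMAC secret are hypothetical and not taken from eKool or any existing system. The central server only knows who the users are and signs tokens; each school runs its own instance with its own version, skin and set of modules.

```python
import hashlib
import hmac
from dataclasses import dataclass, field


class CentralAuth:
    """Hypothetical central server: knows users and signs tokens, nothing else."""

    def __init__(self, secret: bytes):
        self._secret = secret
        self._users = {}  # username -> school id

    def register(self, username: str, school_id: str) -> None:
        self._users[username] = school_id

    def issue_token(self, username: str) -> str:
        school_id = self._users[username]  # KeyError for unknown users
        payload = f"{username}:{school_id}"
        return f"{payload}:{self._sign(payload)}"

    def verify(self, token: str):
        """Return (username, school_id) for a valid token, otherwise None."""
        payload, _, signature = token.rpartition(":")
        expected = self._sign(payload)
        if not hmac.compare_digest(signature.encode(), expected.encode()):
            return None
        username, _, school_id = payload.partition(":")
        return username, school_id

    def _sign(self, payload: str) -> str:
        return hmac.new(self._secret, payload.encode(), hashlib.sha256).hexdigest()


@dataclass
class SchoolInstance:
    """Hypothetical per-school installation, hosted wherever the school likes."""

    school_id: str
    auth: CentralAuth
    version: str = "1.0"
    skin: str = "default"
    modules: dict = field(default_factory=dict)  # module name -> callable

    def handle(self, token: str, module: str, *args):
        identity = self.auth.verify(token)
        if identity is None or identity[1] != self.school_id:
            raise PermissionError("token is not valid for this school")
        if module not in self.modules:
            raise LookupError(f"module {module!r} is not installed here")
        return self.modules[module](identity[0], *args)


auth = CentralAuth(secret=b"demo-secret")
auth.register("mari", "school-a")

# Two schools, each with its own choice of version, skin and modules.
school_a = SchoolInstance(
    "school-a", auth, skin="blue",
    modules={"grades": lambda user: f"grades for {user}"},
)
school_b = SchoolInstance("school-b", auth, version="0.9")

token = auth.issue_token("mari")
print(school_a.handle(token, "grades"))
```

In a real deployment the school instances would more likely ask the central server to check a token over the network, or verify a public-key signature, rather than share a secret with it. The point of the sketch is only that the central part can stay very small while the schools vary everything else.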

It may not even be necessary to start from scratch, since there are several open-source applications around the world with more or less similar goals (for example Schooltool, Moodle).

Some of them would make it fairly easy to move from the functionality of an electronic gradebook to that of a real e-school.

Given that many things in the current eKool were in English for a long time, one suspects that they too built their solution by adapting something that already existed.

PS. I wonder who Koolitööde AS actually belongs to? The problem is probably my own lack of skill, but I could not find a conclusive answer in half an hour.